People often have difficulty getting started with Kinect programming. This VS 2012 project template for WPF and C# stubs out many standard patterns developed in the months since the Kinect SDK was first released, including setting up stream event handlers, deallocating streams and closing the sensor, and gracefully handling situations such as accidentally unplugging the Kinect sensor.

This project template works with both the Kinect 4 Windows sensor and, for development, the standard Xbox Kinect sensor.

The template additionally includes extension methods for the Kinect Sensor object that make it easy to create a green screen (background subtraction) image, a color blob image, or draw a player skeleton.

These are provided to make it easier to replicate the most common visualization scenarios for presentations or quick prototypes. The extension methods are based on the ones I originally wrote for the Apress book Beginning Kinect Programming with the Microsoft Kinect SDK.

A deeper discussion of the capabilities of this project template can be found here.

Following are some key features:

1. Initialization Code

All the initialization code and Kinect stream event handlers are stubbed out in the InitSensor method. All you need to do is uncomment the streams you want to use. The event handler code is also stubbed out with the proper pattern for opening and disposing of frame objects. Whatever you need to do with the image, depth and skeleton frames can be done inside those using statements. This code also follows the latest agreed-upon best practices for efficiently managing streamed data as of the 1.7 SDK.
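The template itself is C#, and its exact handler code is not reproduced here. As a language-neutral illustration of the open/check/copy/dispose pattern described above, here is a minimal Python sketch. `FakeFrame`, `open_frame` and `ColorHandler` are hypothetical stand-ins, not SDK types. The sketch assumes a frame may be missing by the time the handler runs, so it is checked for `None`, and it copies pixel data into a buffer that is allocated once and reused.

```python
from contextlib import contextmanager


class FakeFrame:
    """Hypothetical stand-in for an SDK frame object (not a real SDK type)."""

    def __init__(self, pixels):
        self.pixels = bytes(pixels)
        self.disposed = False

    def copy_pixel_data_to(self, buffer):
        # Copy into a caller-owned buffer instead of allocating a new one.
        buffer[:len(self.pixels)] = self.pixels

    def dispose(self):
        self.disposed = True


@contextmanager
def open_frame(frame):
    """Plays the role of a C# `using` block: always dispose on exit."""
    try:
        yield frame
    finally:
        if frame is not None:
            frame.dispose()


class ColorHandler:
    def __init__(self, buffer_size):
        self.buffer = bytearray(buffer_size)  # allocated once, reused per frame
        self.frames_seen = 0

    def on_frame_ready(self, frame):
        with open_frame(frame) as f:
            if f is None:  # assumed: the frame may no longer be available
                return
            f.copy_pixel_data_to(self.buffer)
            self.frames_seen += 1
```

Reusing one buffer per stream avoids allocating a large array on every frame, which is the kind of streamed-data efficiency the paragraph above refers to.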

2. Disposal Code

Whatever you enable in the InitSensor method you will need to disable and dispose of in the DeInitSensor method. Again, this just requires uncommenting the appropriate lines. The DeInitSensor method also implements a disposal pattern that is somewhat popular now: the sensor is shut down on a background thread rather than on the main thread. I’m not sure if this is a best practice as such, but it resolves a problem many C# developers were running into when shutting down their Kinect-enabled applications.
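The idea of shutting the sensor down off the main thread can be sketched as follows. This is an illustrative Python model, not the template's C# code: `FakeSensor` and `deinit_sensor` are hypothetical names, and the choice to detach the frame handlers before starting the shutdown is an assumption made for the sketch.

```python
import threading


class FakeSensor:
    """Hypothetical stand-in for a sensor whose stop() call may block."""

    def __init__(self):
        self.running = True
        self.stopped_on = None

    def stop(self):
        # Record which thread performed the shutdown.
        self.stopped_on = threading.current_thread().name
        self.running = False


def deinit_sensor(sensor, frame_handlers):
    """Detach handlers, then stop the sensor on a background thread."""
    if sensor is None:
        return None
    frame_handlers.clear()  # assumed order: stop receiving frames first
    worker = threading.Thread(
        target=sensor.stop, name="sensor-shutdown", daemon=True
    )
    worker.start()
    return worker  # caller may join() it, or simply let the UI close
```

Because the potentially slow `stop()` call runs on the worker, the thread that called `deinit_sensor` returns immediately instead of blocking.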

3. Status Changed Code

The Kinect can actually be disconnected in mid-process or simply not be on when you first run an application. It is also surprisingly common to forget to plug the Kinect’s power supply in. Generally, your application will just crash in such situations. If you properly handle the KinectSensors.StatusChanged event, however, your application will just start up again when you get the sensor plugged back in. A pattern for doing this was first introduced in the KinectChooser component in the Developer Toolkit. A lightweight version of this pattern is included in the Kinect Application Project Template.
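The reconnect behavior amounts to a small state machine, sketched below in Python. The `Status` members mirror the names of a few SDK status values (Connected, Disconnected, NotPowered). `App` and its methods are hypothetical stand-ins for the template's C# code, which is not reproduced here.

```python
from enum import Enum


class Status(Enum):
    CONNECTED = "Connected"
    DISCONNECTED = "Disconnected"
    NOT_POWERED = "NotPowered"


class App:
    """Hypothetical application shell that tracks at most one active sensor."""

    def __init__(self):
        self.sensor = None
        self.events = []

    def init_sensor(self, sensor):
        self.sensor = sensor
        self.events.append("init")

    def deinit_sensor(self):
        self.sensor = None
        self.events.append("deinit")

    def on_status_changed(self, sensor, status):
        if status is Status.CONNECTED:
            # A sensor became usable: adopt it only if none is active.
            if self.sensor is None:
                self.init_sensor(sensor)
        elif sensor is self.sensor:
            # The active sensor was unplugged or lost power: release it
            # and wait for the next CONNECTED notification.
            self.deinit_sensor()
```

Feeding the handler the sequence NOT_POWERED, CONNECTED, DISCONNECTED, CONNECTED for the same sensor yields the events `init`, `deinit`, `init`. The application recovers when the sensor is plugged back in instead of crashing.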

4. Extension Methods

While most people were working on controls for the Kinect 4 Windows SDK, Clint Rutkas and the Coding4Fun guys brilliantly came up with the idea of developing extension methods for handling the various Kinect streams.

The extension methods included with this template provide lots of conversions from bitmaps and byte arrays to BitmapSource types (useful for WPF image controls) and vice versa. This allows you to do something easy like display a color stream, which otherwise can be rather hairy. The snippet below assumes there is an image control in the MainWindow named canvas.

More in line with the original Coding4Fun Toolkit, the extension methods also make some very difficult scenarios trivial, for instance background subtraction (also known as green screening), skeleton drawing and player masking. These methods should make it easier to quickly mock up a demo or even show off the power of the Kinect in the middle of a presentation using just a few lines of code.
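To give a sense of what background subtraction involves under the hood, here is a minimal Python sketch. It is not the template's extension method. It relies on the v1 depth format, in which each 16-bit depth value carries a player index in its low 3 bits. It also assumes, for simplicity, that the color and depth images have the same resolution and are already aligned pixel for pixel. Real code has to map between depth and color coordinates, and the player index is only populated when skeleton tracking is enabled. The function names are hypothetical.

```python
PLAYER_INDEX_BITS = 3                            # low bits hold the player index
PLAYER_INDEX_MASK = (1 << PLAYER_INDEX_BITS) - 1


def split_depth_pixel(raw):
    """Return (depth_in_mm, player_index) from one raw 16-bit depth value."""
    return raw >> PLAYER_INDEX_BITS, raw & PLAYER_INDEX_MASK


def green_screen(color_bgra, raw_depth):
    """Keep color pixels that belong to a player; make the rest transparent.

    color_bgra: bytes, 4 bytes per pixel (blue, green, red, unused/alpha).
    raw_depth:  sequence of raw depth values, one per pixel (assumed aligned).
    """
    if len(color_bgra) != 4 * len(raw_depth):
        raise ValueError("color and depth images must have the same pixel count")
    out = bytearray(len(color_bgra))             # all zeros = fully transparent
    for i, raw in enumerate(raw_depth):
        _, player = split_depth_pixel(raw)
        if player != 0:                          # 0 means "no player here"
            out[4 * i:4 * i + 3] = color_bgra[4 * i:4 * i + 3]
            out[4 * i + 3] = 255                 # opaque
    return bytes(out)
```

The result is a BGRA buffer that can be layered over any backdrop. Player masking is the same loop with a flat color written in place of the camera pixel.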

This release introduces support for the Kinect for Windows v2 sensor, and introduces a broad range of capabilities for developers. The Kinect for Windows SDK 2.0 includes the following:

- Drivers for using Kinect v2 sensors on a computer running Windows 8 (x64), Windows 8.1 (x64), and Windows Embedded Standard 8 (x64)

- Application programming interfaces (APIs) and device interfaces

- Code samples

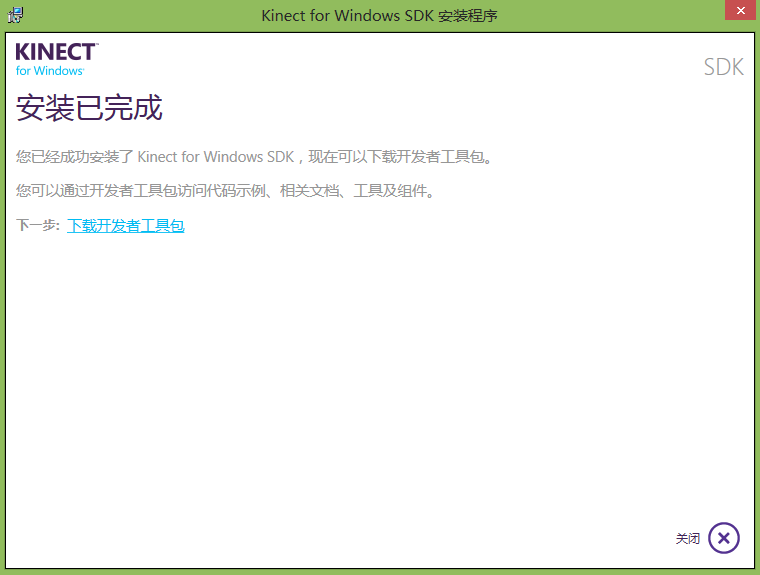

To install the Kinect for Windows SDK 2.0:

- Make sure the Kinect sensor is not plugged into any of the USB ports on the computer.

- From the download location, double-click on KinectSDK-v2.0_1409-Setup.exe

- Once the Kinect for Windows SDK has completed installing successfully, ensure the Kinect sensor is connected to the power hub and the power hub is plugged into an outlet. Plug the USB cable from the power hub into a USB 3.0 port on your computer. Driver installation will begin automatically.

- Wait for driver installation to complete. You can verify that installation has completed by launching Device Manager and verifying that 'KinectSensor Device' exists in the device list. Note: On first plugin, the firmware on the device will be updated. This may result in the device enumeration happening several times in the first minute.

- Installation is now complete.

What's New:

Windows Store Support

With this release of Kinect for Windows, you are able to develop and publish Kinect enabled applications which target the Windows Store. We are incredibly excited to see what people create. All of the Kinect SDK and sensor functionality are available in this API surface, except for Speech. For more information about developing Windows Store applications which use Kinect, please see: https://go.microsoft.com/fwlink/?LinkId=517592.

Unity Support

For the first time, the Kinect API set is available in Unity Pro, through a Unity Package. We are excited to be able to offer the platform to our developers. APIs for Kinect for Windows core functionality, visual gesture builder and face are now available to be called from Unity apps. The Unity plugins are available for download at: https://go.microsoft.com/fwlink/?LinkID=513177

.NET APIs

The Managed API set should feel familiar to developers who worked with our managed APIs in the past. We know this is one of the fastest development environments available, and that many development shops have an existing investment in this space. All of the Kinect SDK and sensor functionality are available in this API surface.

Native APIs

Many Kinect applications require the full power and speed that native C++ code provides. We are excited to share this iteration of the native APIs for Kinect. The form and structure of the APIs are identical to the Managed API set, but allow a developer to access the full speed of C++. These APIs are a significant divergence from the v1.x native APIs, and should be significantly easier to use. All of the Kinect SDK and sensor functionality are available in this API surface.

Audio

The Kinect sensor and SDK provide a best in class array microphone and advanced signal processing to create a virtual, software based microphone which is highly directional, and which can understand the direction sounds are coming from. In addition, this provides a very high quality input for Speech recognition.

Face APIs

Extended massively from v1, the Face APIs provide a wide variety of functionality to enable rich face experiences and scenarios. Within the Face APIs, developers will be able to detect faces in view of the sensor, align them to 5 unique facial identifiers, and track orientation in real-time. With HD Face, the technology provides 94 unique 'shape units' per face, to create meshes with a very rich likeness to the user. The meshes can be tracked in real-time to show rich expressiveness and live tracking of the user's facial movements and expressions.

Kinect for Windows v2 Hand Pointer Gestures Support

If you would like to enable your applications to be controlled through hand pointer gestures, Kinect for Windows v2 has improved support. See ControlsBasics-XAML, ControlsBasics-WPF and ControlsBasics-DX for examples of how to enable hand pointer gestures in your applications. This is an evolution of the KinectRegion/KinectUserViewer support that we provided in Kinect for Windows v1.7 and later. KinectRegion and KinectUserViewer are available for XAML and WPF applications. The DirectX support is built on top of a lower level Toolkit Input component.

Kinect Fusion

With this release of Kinect for Windows, you are able to develop and deploy Kinect Fusion applications. This provides higher resolution, better camera tracking and better performance than the 1.x releases of Kinect Fusion.

Kinect Studio

Kinect Studio has had a major rewrite since the v1 days, in order to handle the new sensor, and to provide users with more customization and control. The new user-interface offers flexibility in the layout of various workspaces and customization of the different views. It is now possible e.g. to compare two 2D or 3D views side-by-side or to create a custom layout to meet your needs. The separation of the monitoring, recording and playback streams exposes additional functionality such as file- and stream-level metadata. The timeline features: in- and out-points to control what portion of the playback to play; pause-points that let you set multiple points at which to suspend a playback; markers, that let you attach meta-data to various points in time. This preview also exposes playback looping and additional 2D/3D visualization settings. There is still some 'placeholder' artwork here and there, but the tooltips should guide you along.

Visual Gesture Builder (Preview)

Introducing Visual Gesture Builder, a gesture detector builder that uses machine-learning and body-frame data to 'define' a gesture. Multiple body-data clips are marked (aka 'tagged') with metadata about the gesture which is then used by a machine-learning trainer during the build step to extract a gesture definition from the body-data clips. The gesture definition can subsequently be used by the gesture detection runtime - called by your application - to detect one or more gestures. While using machine-learning for gesture detection is not for the faint of heart, it offers a path to rapid prototyping. Using vgbview, you can benchmark your gesture definitions without requiring that you write any code. For detailed walkthrough videos and a whitepaper on using VGB, please see the resources at: https://social.msdn.microsoft.com/Forums/en-US/02e0302a-e3bd-46d3-9146-0dacd11d2a8d/deep-dive-videos-and-whitepaper-for-visual-gesture-builder?forum=kinectv2sdk.