
April 2019

Volume 34 Number 4

[.NET]

By Xavier Morera

You probably take search for granted. You use it daily to perform all kinds of tasks, from discovering a room for your next trip, to locating information you need for your job. A properly implemented search can help you save money—or make money. And even in a great app, a bad search still creates an unsatisfactory UX.

The problem is that despite its importance, search is one of the most misunderstood functionalities in IT, noticed only when missing or broken. But search doesn’t need to be an obscure feature that’s difficult to implement. In this article I’m going to explain how you can develop an enterprise search API in C#.

To learn about search, you need a search engine. There are many options, from open source to commercial and everything in between, many of which internally leverage Lucene, the information retrieval library of choice. This includes Azure Search, Elasticsearch and Solr. Today I’ll use Solr. Why? It has been around for a long time, has good documentation, a vibrant community, many notable users, and I’ve personally used it to implement search in many applications, from small sites to large corporations.

Getting Solr

Installing a search engine may sound like a complicated task and, indeed, if you’re setting up a production Solr instance that needs to support a high number of queries per second (QPS), then yes, it can be complicated. (QPS is a common metric used to refer to the search workload.)

Those steps are covered at length in the “Installing Solr” section of the Solr documentation (lucene.apache.org/solr), as well as in “The Well-Configured Solr Instance” section in the “Apache Solr Reference Guide” (bit.ly/2IK7mqY). But I just need a development Solr instance, so I simply go to bit.ly/2tEXqoo and get a binary release; solr-7.7.0.zip will work.

I download the .zip file, unzip it, open the command line and change to the root of the folder where I unzipped the file. Then I start Solr by issuing the command `bin\solr start`.

That’s it. I just need to navigate to http://localhost:8983, where I’m greeted with the Solr Admin UI, shown in Figure 1.

Figure 1 A Search Engine Up and Running

Getting Data

Next, I need data. There are plenty of datasets available with interesting data, but every day thousands of developers end up at StackOverflow because they can’t quite remember how to write to a file; they can’t exit VIM; or they need a C# snippet that solves a particular problem. The good news is that the StackOverflow data is available as an XML dump containing about 10 million questions and answers, tags, badges, anonymized user info, and more (bit.ly/1GsHll6).

Even better, I can select a dataset with the same format from a smaller StackExchange site containing just a few thousand questions. I can test with the smaller dataset first, and later beef up the infrastructure to work with more data.

I’ll start with the data from datascience.stackexchange.com, which contains about 25 thousand posts. The file is named datascience.stackexchange.com.7z. I download it and extract Posts.xml.

A Few Required Concepts

I have a search engine and data, so it’s a good time to review some of the important concepts. If you’re used to working with relational databases, they’ll likely be familiar.

The index is where a search engine stores all the data that’s collected for searching. At a high level, Solr stores data in what’s called an inverted index. In an inverted index, words (or tokens) point to specific documents. When you search for a particular word, that’s a query. If the word is found (a hit or a match), the index will indicate which documents contain this word and where.
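To make the idea concrete, here is a toy inverted index as a small top-level C# program (C# 9 or later). It is only a sketch that assumes whitespace tokenization and lowercasing. Lucene’s real index also records term positions and frequencies, and the three sample documents are made up.

```csharp
using System;
using System.Collections.Generic;

// Three hypothetical documents, keyed by id.
var docs = new Dictionary<int, string>
{
    [1] = "How do I exit Vim",
    [2] = "Write to a file in C#",
    [3] = "Exit a loop in C#",
};

// Build the inverted index: token -> ids of the documents that contain it.
var index = new Dictionary<string, SortedSet<int>>();
foreach (var (id, text) in docs)
{
    foreach (var token in text.ToLowerInvariant().Split(' ', StringSplitOptions.RemoveEmptyEntries))
    {
        if (!index.TryGetValue(token, out var postings))
        {
            postings = new SortedSet<int>();
            index[token] = postings;
        }
        postings.Add(id);
    }
}

// A single-word query is a dictionary lookup, not a scan over every document.
SortedSet<int> Search(string word) =>
    index.TryGetValue(word.ToLowerInvariant(), out var hits) ? hits : new SortedSet<int>();

Console.WriteLine(string.Join(",", Search("exit"))); // 1,3
```

The lookup cost does not depend on how many documents there are, which is why an inverted index answers word queries quickly.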

The schema is how you specify the structure of the data. Each record is called a document, and just as you’d define the columns of a database, you specify the fields of a document in a search engine.

You construct the schema by editing an XML file called schema.xml, defining the fields with their types. You can also use dynamic fields, which are declared with a name pattern such as `*_txt`. When a document contains a field that isn’t defined explicitly but whose name matches a pattern, Solr indexes it with that dynamic field’s type. This is useful when you’re not sure of all the fields that are present in the data.

It may have already occurred to you that Solr is quite similar to a NoSQL document store, as it can contain documents with fields that are denormalized and not necessarily consistent across the collection of documents.

Schemaless mode is another way of modeling the data within the index. In this case, you don’t explicitly tell Solr which fields you’ll be indexing. Instead, you simply add data and Solr constructs a schema based on the type of data being added to the index. To use schemaless mode, you must have a managed schema, which means you can’t manually edit fields. This is especially useful for a data exploration phase or proof-of-concept.

I’ll use a manually edited schema, which is the recommended approach for production as it yields greater control.

Indexing is the process of adding data to the index. During indexing, an application reads from different sources and prepares data for ingestion. That’s the word used for adding documents; you might also hear the term feeding.

Once the documents are indexed, it’s possible to search, hopefully returning the documents most relevant to a particular search in the top positions.

Relevancy ranking is how the most appropriate results are returned at the top. This is an easy-to-explain concept. In the best situation, you run a query and the search engine “reads” your mind, returning exactly the documents you were looking for.

Precision vs. recall: Precision refers to how many of the returned results are relevant (the quality of your results), while recall refers to how many of all the relevant documents in the index were returned (the quantity or completeness of the results).
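Both measures are simple ratios. This sketch computes them for a hypothetical relevance judgment. The document ids and the `Evaluate` helper are illustrative and not part of Solr or SolrNet.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Precision: share of the returned documents that are relevant.
// Recall: share of all relevant documents that were returned.
static (double Precision, double Recall) Evaluate(ISet<int> returned, ISet<int> relevant)
{
    int hits = returned.Count(id => relevant.Contains(id));
    double p = returned.Count == 0 ? 0 : (double)hits / returned.Count;
    double r = relevant.Count == 0 ? 0 : (double)hits / relevant.Count;
    return (p, r);
}

// Hypothetical judgment: 4 documents returned, 3 of them relevant,
// out of 6 relevant documents in the whole index (so 3/4 and 3/6).
var returnedIds = new HashSet<int> { 1, 2, 3, 4 };
var relevantIds = new HashSet<int> { 1, 2, 3, 7, 8, 9 };
var (precision, recall) = Evaluate(returnedIds, relevantIds);
Console.WriteLine($"precision={precision}, recall={recall}");
```

Returning more documents tends to raise recall and lower precision, which is the tradeoff that relevancy tuning works around.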

Analyzers, tokenizers and filters: When data is indexed or searched, an analyzer examines the text and generates a token stream. Transformations are then applied via filters to try to match queries with the indexed data. You need to understand these if you want a deeper grasp of search engine technology. Luckily, you can start creating an application with just a high-level overview.
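The shape of that pipeline can be mimicked in a few lines. This C# sketch chains a tokenizer with a lowercase filter and a stop-word filter. The regular expression and the stop-word list are assumptions chosen for illustration. Solr configures its real analyzers, such as the one behind `text_general`, in schema.xml.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.RegularExpressions;

// Hypothetical stop-word list; Solr reads its own from a file referenced in the schema.
var stopWords = new HashSet<string> { "a", "an", "the", "to", "in", "how", "do", "i" };

// Tokenizer: split on anything that is not a letter, digit, '#' or '+'.
IEnumerable<string> Tokenize(string text) =>
    Regex.Split(text, @"[^\p{L}\p{Nd}#+]+").Where(t => t.Length > 0);

// Filters transform the token stream one step at a time.
IEnumerable<string> LowerCaseFilter(IEnumerable<string> tokens) =>
    tokens.Select(t => t.ToLowerInvariant());

IEnumerable<string> StopFilter(IEnumerable<string> tokens) =>
    tokens.Where(t => !stopWords.Contains(t));

// The analyzer is the tokenizer followed by its filters.
List<string> Analyze(string text) => StopFilter(LowerCaseFilter(Tokenize(text))).ToList();

Console.WriteLine(string.Join(" | ", Analyze("How do I write to a File in C#?"))); // write | file | c#
```

Running the same analyzer on documents at index time and on queries at search time is what lets "File" in a query match "file" in the index.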

Understanding the Data

I need to understand the data in order to model the index. For this, I’ll open Posts.xml and analyze it.

The data is structured as follows: the posts node contains many row child nodes. Each child node corresponds to one record, with each field exported as an attribute in each child node. The data is pretty clean for ingesting into the search engine, which is good.

At a glance, I can quickly observe how there are fields of different types. There’s a unique identifier, a few dates, a couple of numeric fields, some long and some short text fields, and some metadata fields. For brevity, I edited out the Body, which is a large text field, but all others are in their original state.

Configuring Solr to Use a Classic Schema

There are several ways to specify the structure of the data for the index. By default, Solr uses a managed schema, which means it uses schemaless mode. But I want to construct the schema manually (what’s called a classic schema), so I need to make a few configuration changes. First, I’ll create a folder to hold my index configuration, which I’ll call msdnarticledemo. The folder is located in `<solr>\server\solr`, where `<solr>` is the folder where I unzipped Solr.

Next, I create a text file called core.properties at the root of this folder, which needs only the following line: `name=msdnarticledemo`. This file is used to create a Solr core, which is just a running instance of a Lucene index. You might hear the word collection, too, which can have a different meaning depending on the context. For my current purposes, a core is the equivalent of an index.

I now need to copy the contents of a sample clean index to use as a base. Solr includes one in `<solr>\server\solr\configsets\_default`. I copy the conf folder into msdnarticledemo.

In the very important next step, I tell Solr I want to use a classic schema; that is, that I’ll manually edit my schema. To do this, I open solrconfig.xml and add the line `<schemaFactory class="ClassicIndexSchemaFactory"/>`.

Additionally, still within this file, I comment out two nodes: the updateRequestProcessorChain with `name="add-unknown-fields-to-the-schema"` and the updateProcessor with `name="add-schema-fields"`.

Those two features are what allow Solr to add new fields while indexing data in schemaless mode. I also remove the comment inside this xml node, given that “--” isn’t allowed within xml comments.

Finally, I rename managed-schema to schema.xml. Just like that, Solr is ready to use a manually created schema.

Creating the Schema

The next step is to define the fields in the schema, so I open schema.xml and scroll down until I find the definitions of `id`, `_text_` and `_root_`.

This is how each field is defined, as a `<field>` XML node that contains:

- name: The name for each field.

- type: The type of the field; you can modify how each type is handled from within schema.xml.

- indexed: True indicates this field can be used for searching.

- stored: True indicates this field can be returned for display.

- required: True indicates this field must be present in every document that’s indexed; otherwise, an error is raised.

- multiValued: True indicates this field may contain more than one value.

This may be confusing at first, but some fields can be displayed but not searched (stored but not indexed), while others can be searched but not retrieved after indexing (indexed but not stored). There are other advanced attributes, but I won’t get into their details at this point.

Figure 2 shows how I define the fields in the schema for Posts. For text I use a variety of types, including string, text_gen_sort and text_general. Full-text search is a major objective of a search engine, hence the different text-capable types. I also have a date, an integer, a float and one field that holds more than one value, Tags.

Figure 2 Fields in Schema.xml

What comes next may vary depending on the implementation. At the moment, I have many fields and I can specify which one I want to search on and how important that field is relative to the other fields.

But to start, I can use the catch-all field `_text_` to perform a search over all my fields. I simply add a copyField to schema.xml, `<copyField source="*" dest="_text_"/>`, which tells Solr that the data from all fields should be copied into my default field.

Now, when I run a search, Solr will look within this field and return any document that matches my query.

Next, I restart Solr to load the core and apply the changes by running `bin\solr restart -p 8983`.

I’m now ready to start creating a C# application.

Getting SolrNet and Modeling the Data

Solr provides a REST-like API that you can easily use from any application. Even better, there’s a library called SolrNet (bit.ly/2XwkROA) that provides an abstraction over Solr, allowing you to easily work with strongly typed objects, with plenty of functionality to make search application development faster.

The easiest way to get SolrNet is to install the SolrNet package from NuGet. I’ll include the library in the new Console Application I created using Visual Studio 2017. You may also want to download additional packages, such as SolrCloud, that are required for using other inversion of control mechanisms and for additional functionality.

Within my console application, I need to model the data in my index. This is quite straightforward: I simply create a new class file called Post.cs, as shown in Figure 3.

Figure 3 Post Document Model

This is nothing more than a plain old CLR object (POCO) that represents each individual document in my index, but with an attribute that tells SolrNet to which field each property maps.

Creating a Search Application

When you create a search application, you typically create two separate functionalities:

The indexer: This is the application I need to create first. Quite simply, to have data to search, I need to feed that data to Solr. This may involve reading the data in from multiple sources, converting it from various formats and more, until it’s finally ready for search.

The search application: Once I have data in my index, I can start working on the search application.

With both, the first step is to initialize SolrNet, which you can do with a single call in the console application, `Startup.Init<Post>("http://localhost:8983/solr/msdnarticledemo")` (make sure that Solr is running!).

I’ll create a class for each functionality in my application.

Building the Indexer

To index the documents, I start by obtaining the SolrNet service instance, an `ISolrOperations<Post>` retrieved from `ServiceLocator.Current`, which exposes all the supported operations.

Next, I need to read the contents of Posts.xml into an XmlDocument. This involves iterating over each row node, creating a new Post object, extracting each attribute from the XmlNode and assigning it to the corresponding property.
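Here is a minimal sketch of the reading step using `System.Xml.XmlDocument`. It assumes the attribute layout described earlier, with one `row` element per post. The two-row sample is invented, and tuples stand in for the `Post` class. The `Attr` helper is hypothetical: it returns null for a missing attribute, so answers, which have no Title, don’t cause exceptions.

```csharp
using System;
using System.Collections.Generic;
using System.Xml;

// Hypothetical two-row sample in the shape of Posts.xml: a question and its answer.
const string sample = @"<posts>
  <row Id=""5"" PostTypeId=""1"" Title=""How to pick a model?"" />
  <row Id=""7"" PostTypeId=""2"" ParentId=""5"" />
</posts>";

var doc = new XmlDocument();
doc.LoadXml(sample); // with the real dump, doc.Load(...) would take the path to Posts.xml

// Returns null instead of throwing when an attribute is missing.
static string? Attr(XmlNode node, string name) => node.Attributes?[name]?.Value;

var rows = new List<(int Id, int PostTypeId, string? Title)>();
foreach (XmlNode row in doc.GetElementsByTagName("row"))
{
    rows.Add((
        int.Parse(Attr(row, "Id") ?? "0"),
        int.Parse(Attr(row, "PostTypeId") ?? "0"),
        Attr(row, "Title")));
}

Console.WriteLine($"{rows.Count} rows read"); // 2 rows read
```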

Note that within the field of information retrieval, or search, data is stored denormalized. In contrast, when you’re working with databases, you typically normalize data to avoid duplication. Instead of adding the name of the owner to a post, you add an integer Id and create a separate table to match the Id to a Name. In search, however, you add the name as part of the post, duplicating data. Why? Because when data is normalized, you need to perform joins for retrieval, which are quite expensive. But one of the main objectives of a search engine is speed. Users expect to push a button and get the results they want immediately.

Now back to creating the post object. In Figure 4, I’m going to show only three fields as adding others is quite easy. Please notice how Tags is multivalued and that I’m checking for null values to avoid exceptions.

Figure 4 Populating Fields
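For the multivalued Tags field, the single attribute value has to be split into separate tags. This sketch assumes the value looks like `<python><keras>` once the XML parser has decoded `&lt;` and `&gt;`, which is the format the Stack Exchange dumps used at the time. `ParseTags` is a hypothetical helper, and its result could be assigned to a list-typed Tags property.

```csharp
using System;
using System.Linq;

// Assumption: Tags arrives as one string such as "<python><keras>".
// A multivalued Solr field wants each tag as a separate value.
static string[] ParseTags(string? raw) =>
    string.IsNullOrEmpty(raw)
        ? Array.Empty<string>()   // answers carry no Tags attribute
        : raw.Split(new[] { '<', '>' }, StringSplitOptions.RemoveEmptyEntries);

Console.WriteLine(string.Join(", ", ParseTags("<python><neural-network><keras>"))); // python, neural-network, keras
```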

Once I’ve populated the object, I can add each instance using the Add method.

Alternatively, I can create a collection of posts and add them in batches using AddRange.

Either approach works, but sending documents in batches (for example, 100 at a time) generally performs better in production than adding them one at a time, because it cuts down the number of HTTP requests to Solr. Note that adding a document doesn’t make it searchable. I also need to commit, using the Commit method.
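Batching is easy to do by hand. This sketch splits a sequence into groups of 100 and uses integers as stand-ins for the 25,488 parsed posts. The `Batch` helper is hypothetical; in .NET 6 and later, `Enumerable.Chunk` does the same. With SolrNet, each batch would typically be passed to `AddRange`, followed by a single `Commit` at the end. That part isn’t shown here because it needs a running Solr core.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Splits any sequence into fixed-size batches; the last batch may be smaller.
static IEnumerable<List<T>> Batch<T>(IEnumerable<T> source, int size)
{
    if (size < 1) throw new ArgumentOutOfRangeException(nameof(size));
    var batch = new List<T>(size);
    foreach (var item in source)
    {
        batch.Add(item);
        if (batch.Count == size)
        {
            yield return batch;
            batch = new List<T>(size);
        }
    }
    if (batch.Count > 0) yield return batch;
}

// Integers stand in for the parsed Post objects.
var batches = Batch(Enumerable.Range(1, 25_488), 100).ToList();
Console.WriteLine($"{batches.Count} batches, last has {batches[^1].Count}"); // 255 batches, last has 88
```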

Now I’ll execute and, depending on the amount of data being indexed and the machine on which it’s running, it may take anywhere from a few seconds to a couple of minutes.

Once the process completes, I can navigate to the Solr Admin UI, look for a dropdown on the middle left that says Core Selector and pick my core (msdnarticledemo). From the Overview tab I can see the Statistics, which tell me how many documents I just indexed.

In my data dump I had 25,488 posts, which matches the document count shown in the statistics.

Now that I have data in my index, I’m ready to start working on the search side.

Searching in Solr

Before jumping back into Visual Studio, I want to demonstrate a quick search from Solr’s Admin UI and explain some of the parameters that are available.

In the msdnarticledemo core, I’ll click on Query and push the blue button at the bottom that says Execute Query. I get back all my documents in JSON format, as shown in Figure 5.

Figure 5 A Query in Solr via the Admin UI

So, what exactly did I just do and why did I get back all documents in the index? The answer is simple. Take a look at the parameters, in the column with Request-Handler (qt) at the top. As you can see, one parameter is labeled q, and it has a value of `*:*`. That’s what brought up all the documents. In fact, the query I ran searched all fields for all values, using a field:value pair. If instead I only wanted to search for Solr in the Title, then the q value would be `Title:Solr`.

This field:value syntax is quite useful for building more complicated queries that give each field a different weight. That makes sense, because a word or a phrase that’s found in a Title is usually more important than one in the content. For example, if a document has Enterprise Search in the Title, it’s highly likely that the entire document is going to be about enterprise search. But if I find this phrase in any part of the Body of a document, it may be just a reference to something barely related.
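One way to express such weights is the standard query parser’s boost syntax, `field:"phrase"^N`. The sketch below builds such a string. The field names Title and Body and the boost of 3 are assumptions for illustration, and Solr’s eDisMax parser offers the same idea through its `qf` parameter.

```csharp
using System;
using System.Linq;

// Wraps a phrase in quotes, escaping backslashes and quotes for the query parser.
static string Quote(string phrase) =>
    "\"" + phrase.Replace("\\", "\\\\").Replace("\"", "\\\"") + "\"";

// Searches the same phrase in several fields, applying a ^boost per field
// so that hits in heavier fields contribute more to the score.
static string BoostedQuery(string phrase, params (string Field, int Boost)[] fields) =>
    string.Join(" OR ", fields.Select(f => $"{f.Field}:{Quote(phrase)}^{f.Boost}"));

Console.WriteLine(BoostedQuery("enterprise search", ("Title", 3), ("Body", 1)));
// Title:"enterprise search"^3 OR Body:"enterprise search"^1
```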

The q parameter is probably the most important one, as it retrieves documents in order of relevance by calculating a score. But there are a number of other parameters you can use via the Request Handler to configure how requests are processed by Solr, including filter query (fq), sort, field list (fl) and many more that you can find in the Solr documentation at bit.ly/2GVmYGl. With these parameters you can start building more complicated queries. It takes some time to master, but the more you learn, the better relevancy rankings you’ll get.
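To see how these parameters reach Solr, here is a sketch that assembles a `/select` URL by hand and URL-encodes each value. The core name and port come from this article’s setup. The field names `Title`, `PostTypeId` and `Id` are assumptions about the schema, and in practice SolrNet builds this request for you.

```csharp
using System;
using System.Linq;

// Builds a /select URL by hand to show how the request parameters travel over HTTP.
static string SelectUrl(string core, params (string Name, string Value)[] parameters) =>
    $"http://localhost:8983/solr/{core}/select?" +
    string.Join("&", parameters.Select(p => $"{p.Name}={Uri.EscapeDataString(p.Value)}"));

var url = SelectUrl("msdnarticledemo",
    ("q", "Title:Solr"),        // what to match; drives the relevancy score
    ("fq", "PostTypeId:1"),     // filter only: narrows the results without affecting scores
    ("sort", "score desc"),
    ("fl", "Id,Title,score"),   // which stored fields to return
    ("rows", "10"),
    ("start", "0"));

Console.WriteLine(url);
```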

Keep in mind that the Admin UI is not how an application works with Solr. For that purpose, the REST-like interface is used. If you look right above the results, there’s a link in a gray box that contains the call for this particular query. Clicking on it opens a new window, which holds the response.

This is my query: `http://localhost:8983/solr/msdnarticledemo/select?q=*%3A*`

SolrNet makes calls like this under the hood, but it presents objects I can use from my .NET application. Now I’ll build a basic search application.

Building the Search Application

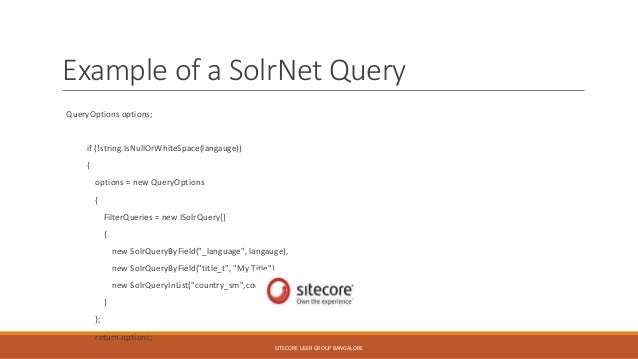

In this particular case, I’m going to search the questions in my dataset, in all fields. Given that the data contains both questions and answers, I’ll filter by the PostTypeId, with “1,” which means it’s a question. To do this I use a filter query—the fq parameter.

Additionally, I’ll set some query options to return one page of results at a time, namely Rows, to indicate how many results, and StartOrCursor, to specify the offset (start). And, of course, I’ll set the query.
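The offset arithmetic is worth spelling out. Pages are usually 1-based for the user, while Solr’s `start` is a 0-based offset into the hits. This sketch uses hypothetical helpers, `StartFor` and `PageCount`. In SolrNet the resulting values would feed the `Rows` and `StartOrCursor` options, and the total hit count would come from the `numFound` value Solr reports.

```csharp
using System;

// Users think in 1-based pages; Solr's start parameter is a 0-based offset.
static int StartFor(int page, int rows)
{
    if (page < 1) throw new ArgumentOutOfRangeException(nameof(page));
    if (rows < 1) throw new ArgumentOutOfRangeException(nameof(rows));
    return (page - 1) * rows;
}

// Number of pages needed to show numFound hits, rounding up.
static long PageCount(long numFound, int rows) => (numFound + rows - 1) / rows;

Console.WriteLine(StartFor(3, 10)); // 20
```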

Figure 6 shows the code required to run a basic search, with query being the text for which I’m searching.

Figure 6 Running a Basic Search

After running the query, I get posts, which is a SolrQueryResults object. It contains a collection of results, as well as many properties with multiple objects that provide additional functionality. Now that I have those results, I can display them to the user.

Narrowing Down the Results

In many cases, the original results can be good, but the user may want to narrow them by a particular metadata field. It’s possible to drill down by a field using facets. In a facet, I get a list of key-value pairs. For example, with Tags I get each tag and how many times each one occurs. Facets are commonly used with numeric, date or string fields. Tokenized text fields are tricky, because each individual token becomes a separate facet value.

To enable facets, I need to add a new QueryOption, Facet, which requests facet counts for the tags field.

Now I can retrieve my facets from posts.FacetFields[“tags”], which is a collection of key-value pairs that contain each particular tag, and how many times each tag occurs in the result set.
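To show what those key-value pairs mean, this sketch computes tag counts in memory over a few hypothetical matching documents, sorted by count and then by name. It only illustrates the shape of the output. Solr computes facet counts on the server over the entire result set, not just the page returned, and `FacetCounts` is a hypothetical helper.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// For each distinct tag across the matching documents, the number of
// documents carrying it, most frequent first.
static List<KeyValuePair<string, int>> FacetCounts(IEnumerable<string[]> tagsPerDoc) =>
    tagsPerDoc
        .SelectMany(tags => tags.Distinct())
        .GroupBy(tag => tag)
        .Select(g => new KeyValuePair<string, int>(g.Key, g.Count()))
        .OrderByDescending(kv => kv.Value)
        .ThenBy(kv => kv.Key, StringComparer.Ordinal)
        .ToList();

// Hypothetical tags of three matching questions.
var matches = new[]
{
    new[] { "python", "keras" },
    new[] { "python", "pandas" },
    new[] { "r" },
};

var facets = FacetCounts(matches);
foreach (var (tag, count) in facets)
    Console.WriteLine($"{tag}: {count}"); // python: 2, then keras, pandas and r with 1 each
```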

Then I can allow the user to select which tags to drill into, reducing the number of results with a filter query, ideally returning relevant documents.
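Here is a sketch of that drill-down step, which builds one filter query per selected tag on the `tags` field. `TagFilters` and `Quote` are hypothetical helpers. Quoting keeps characters such as `-` literal for the query parser. Because Solr intersects multiple `fq` parameters, each extra tag narrows the results further, and each filter can be cached separately in Solr’s filter cache.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Quote a value so the query parser treats it as one literal term.
static string Quote(string value) =>
    "\"" + value.Replace("\\", "\\\\").Replace("\"", "\\\"") + "\"";

// One filter query per selected tag; Solr ANDs multiple fq parameters together.
static List<string> TagFilters(string field, IEnumerable<string> selectedTags) =>
    selectedTags.Select(tag => $"{field}:{Quote(tag)}").ToList();

var filters = TagFilters("tags", new[] { "python", "neural-network" });
foreach (var fq in filters)
    Console.WriteLine($"fq={fq}");
// fq=tags:"python"
// fq=tags:"neural-network"
```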

Improving Your Search—What’s Next?

So far, I’ve covered the essentials of implementing basic search in C# with Solr and SolrNet using the questions from one of the StackExchange sites. However, this is just the start of a new journey where I can delve into the art of returning relevant results using Solr.

Some of the next steps include searching by individual fields with different weights; providing highlighting in results to show matches in the content; applying synonyms to return results that are related but may not contain the exact words that were searched; stemming, which is what reduces words to their base to increase recall; phonetic search, which helps international users; and more.

All in all, knowing how to implement search is a valuable skill that can pay off in your future as a developer.

To accompany this article, I’ve created a basic search project, as shown in Figure 7, that you can download to learn more about enterprise search. Happy searching!

Figure 7 A Sample Search Project

Xavier Morera helps developers understand enterprise search and Big Data. He creates courses at Pluralsight and sometimes at Cloudera. He worked for many years at Search Technologies (now part of Accenture), dealing with search implementations. He lives in Costa Rica, and you can find him at xaviermorera.com.

Thanks to the following technical experts for reviewing this article: Jose Arias (Accenture), Jonathan Gonzalez (Accenture)

Jose Arias is passionate about search- and big data-related technologies, especially those used in data analysis. He is a senior developer at Accenture Search & Content Analytics. https://www.linkedin.com/in/joseariasq/

Jonathan Gonzalez (j.gonzalez.vindas@accenture.com)

Jonathan Gonzalez is Senior Managing Architect for Accenture, with over 18 years of experience in software development and design, the last 13 specializing in information retrieval, enterprise search, data processing and content analytics. https://www.linkedin.com/in/jonathan-gonzalez-vindas-ba3b1543/
